Oscar built Magic Vision for Magic: The Gathering

Oscar created Magic Vision, a serverless app that lets you take a photo of a Magic: The Gathering card. The app analyzes the image and returns a cleaned-up photo, the card's current market value, and a link to buy or sell that card.

Magic Vision

About Me#

Oscar M Romero

I am a Software Engineer. I am fascinated by the creativity and fusion of software and hardware. I love learning, and I am looking for opportunities to learn and grow with others.

The Premise#

So, you decided to learn how to play Magic: The Gathering. You went out, bought all the card packs, and spent over $200. You now have a bunch of duplicates or useless cards. What's worse, you still don't have the card you want or need for a tournament. This year's total payout for the World Championship is $250,000.

Use the power of Magic Vision! Magic Vision lets you take a photo of a Magic card. The app analyzes the image and returns a cleaned-up photo, the current market value, and a link to buy or sell that card.

Tools used#

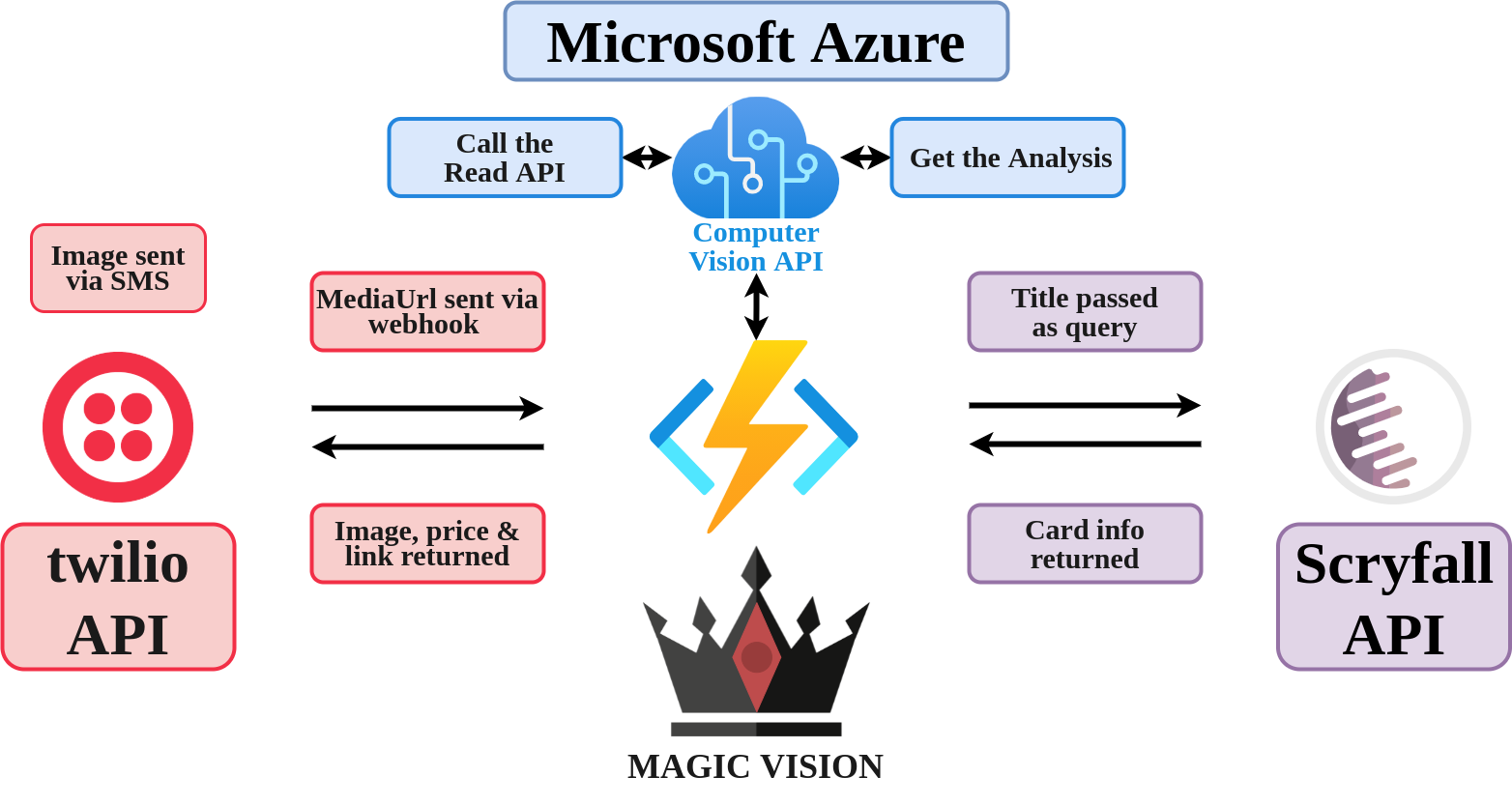

Magic Vision uses Microsoft's Azure Serverless Functions. This lets us focus on our application logic instead of setting up, configuring, and maintaining our own server.

Magic Vision is built using three main APIs:

- Azure Computer Vision API: reads text from a submitted image

- Twilio API: provides a phone number that can call or text other phones, plus an interface to use from your codebase

- Scryfall API: provides all relevant information about Magic: The Gathering playing cards

The code is written in Node.js and uses npm (Node Package Manager) to install the following packages:

- node-fetch: provides an interface for fetching HTTP resources

- querystring: parses a string into a collection of key-value pairs

- twilio: the helper library for making HTTP requests to the Twilio API

Step by step (with code snippets)#

Let's review the code!

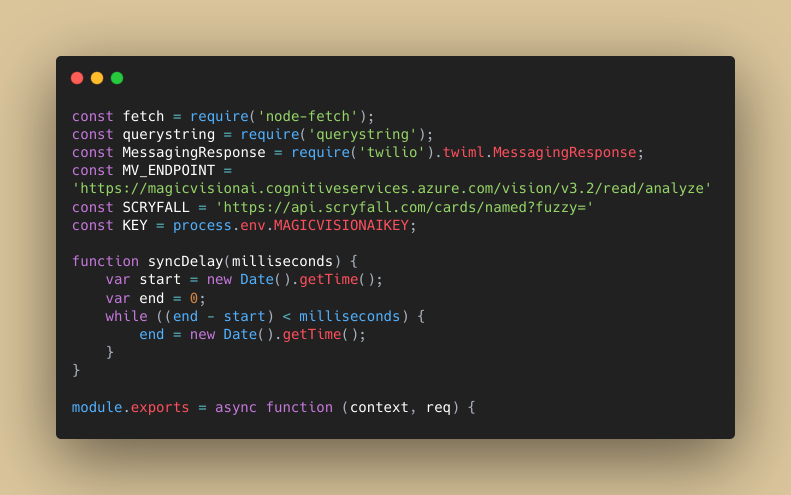

Here we set our constants, load the required packages, and define our endpoints and key. We also define the function sync delay(milliseconds), which is used later.
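Since the snippet above is an image, here is a minimal sketch of what a blocking delay helper could look like. The name `syncDelay` and the busy-wait approach are my assumptions, not necessarily the original code. A promise-based `delay` is included for comparison, because it waits without blocking the event loop.

```javascript
// Hypothetical reconstruction: block execution for the given number of milliseconds.
function syncDelay(milliseconds) {
  const start = Date.now();
  // Busy-wait: keep checking the clock until enough time has passed.
  while (Date.now() - start < milliseconds) {
    // intentionally empty
  }
}

// Non-blocking alternative for use inside an async function: `await delay(1000);`
function delay(milliseconds) {
  return new Promise((resolve) => setTimeout(resolve, milliseconds));
}
```

The busy-wait version is simple but keeps the CPU spinning while it waits, so the promise-based version is usually the friendlier choice when the surrounding function is already `async`.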

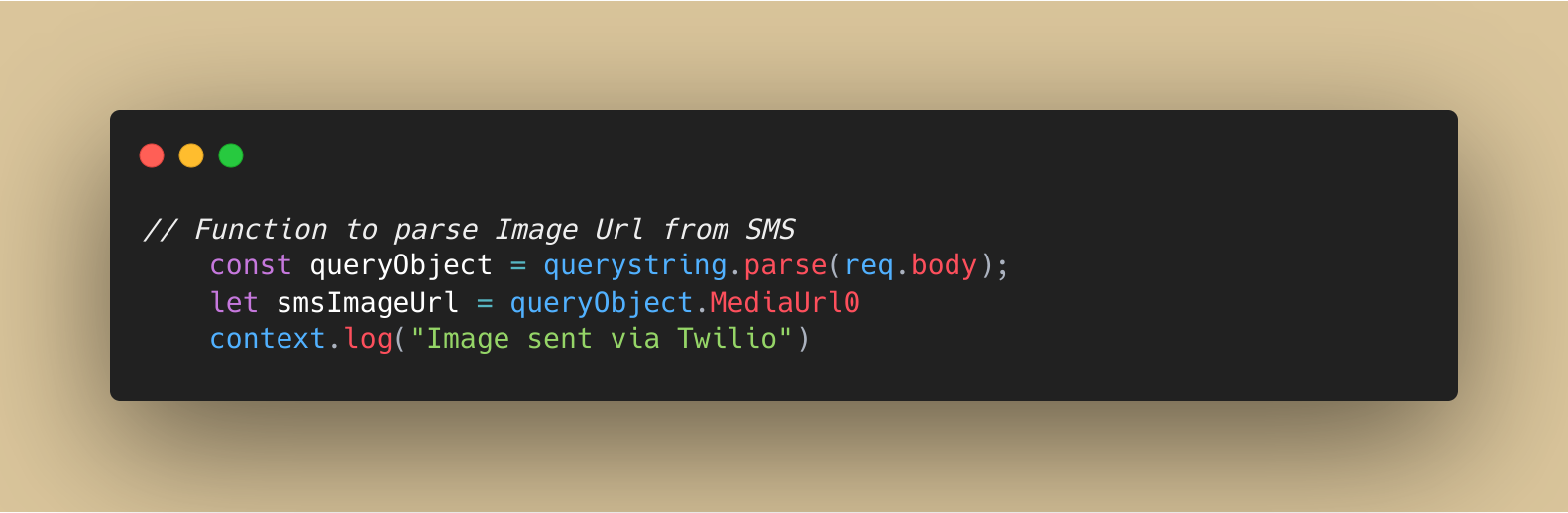

Here we create a query object from the request body that Twilio sends via webhook to our Magic Vision function. The object is created with the querystring package.

After we create the query object, we pull out the media URL, which points to the image sent via text message.

We then output the message "Image sent via Twilio".

NOTE: JavaScript uses console.log() to output a message.

Azure Functions uses context.log() to output messages to the CLI online.
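As a rough illustration of this step, the sketch below parses a URL-encoded webhook body and reads the first media URL. Twilio sends the first attachment as the `MediaUrl0` parameter. The sample body and the helper name `getMediaUrl` are made up for the example.

```javascript
const querystring = require('querystring');

// Invented sample of the URL-encoded body Twilio posts to the webhook.
const sampleBody =
  'From=%2B15551234567&NumMedia=1&MediaUrl0=https%3A%2F%2Fexample.com%2Fcard.jpg';

// Hypothetical helper: parse the body and return the first attached image URL.
function getMediaUrl(rawBody) {
  const query = querystring.parse(rawBody);
  return query.MediaUrl0; // undefined when the text message has no attachment
}

// Inside an Azure Function you would call context.log() instead of console.log().
console.log('Image sent via Twilio:', getMediaUrl(sampleBody));
```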

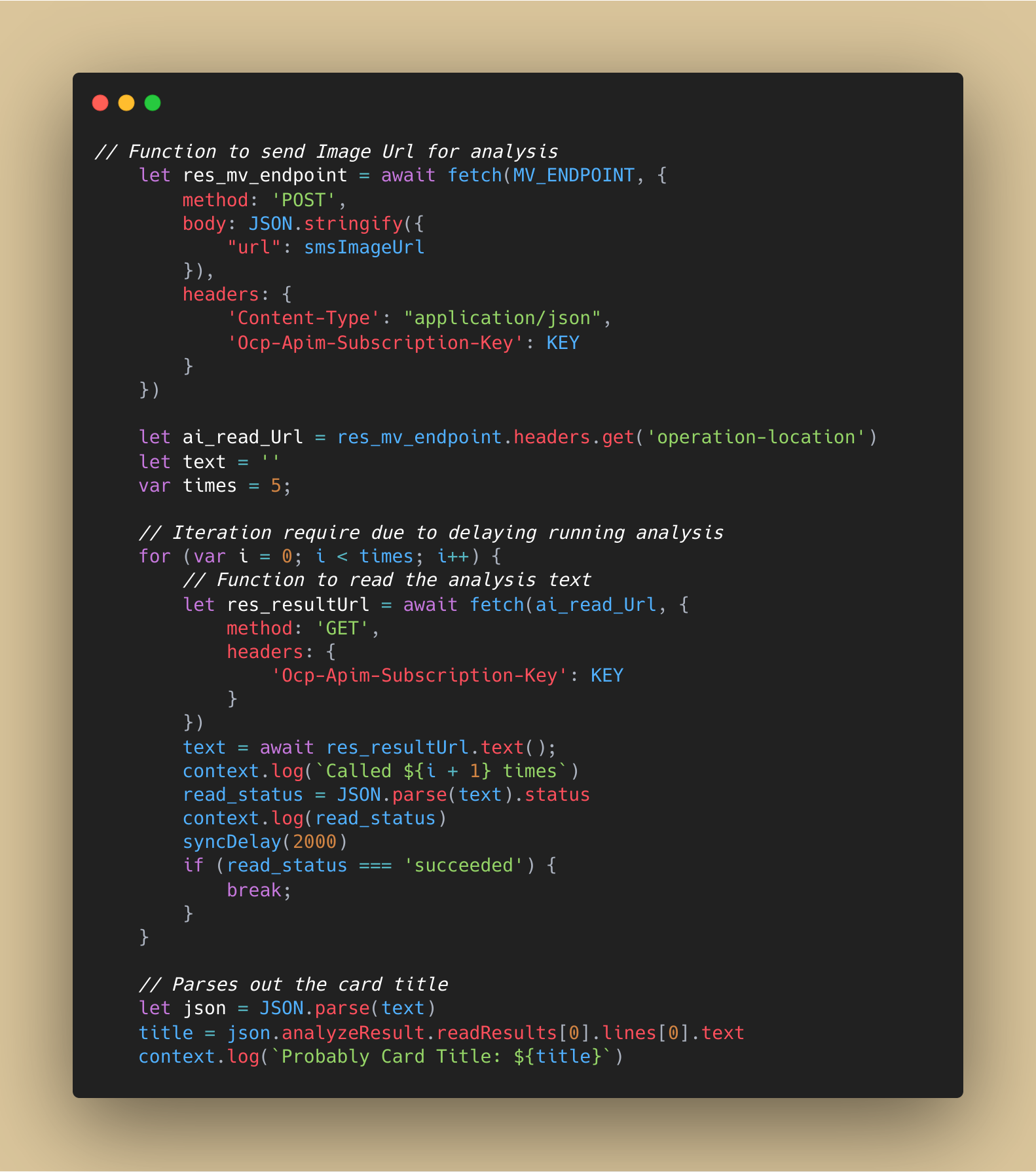

This code section has 3 major parts.

First, we call the Computer Vision API with a POST method using the fetch function. We attach the image URL as a JSON string in the request body. This lets the cognitive service analyze the image and recognize any text. In the request header we also provide the key that identifies us to the AI service as the account owner.

From the fetch response we read the operation-location header. This is the URL where the result of the analysis can be retrieved. It is assigned to the variable ai_read_url for the second part.

Second, we call the Computer Vision API again with a GET method, using the location from the step above, to return the analysis. This step requires iteration because the analysis takes time. This is where the sync delay function comes into play: it tells the system to wait a while before fetching. The loop runs up to 5 times, with a conditional that breaks out of the for loop once read_Status reports success.

Last, we parse the returned text for the approximate card title and output the message "Probable Card Title".
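The three parts can be sketched roughly as follows. This is not the original code: the endpoint and key are placeholders, the v3.2 Read API path is an assumption about which version was used, and taking the first recognized line as the card title is my guess at the parsing step. The `fetchImpl` parameter defaults to the global `fetch` in newer Node.js versions. The original used node-fetch.

```javascript
// Placeholder values (assumptions): substitute your own resource endpoint and key.
const AI_ENDPOINT = 'https://<your-resource>.cognitiveservices.azure.com';
const AI_KEY = '<your-key>';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function readCardTitle(
  imageUrl,
  { fetchImpl = globalThis.fetch, attempts = 5, waitMs = 1000 } = {}
) {
  // Part 1: submit the image URL for analysis.
  const submit = await fetchImpl(`${AI_ENDPOINT}/vision/v3.2/read/analyze`, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Ocp-Apim-Subscription-Key': AI_KEY,
    },
    body: JSON.stringify({ url: imageUrl }),
  });
  // The result URL comes back as a response header, not in the body.
  const aiReadUrl = submit.headers.get('operation-location');

  // Part 2: poll the result URL, waiting between attempts.
  for (let i = 0; i < attempts; i++) {
    await sleep(waitMs);
    const res = await fetchImpl(aiReadUrl, {
      headers: { 'Ocp-Apim-Subscription-Key': AI_KEY },
    });
    const result = await res.json();
    if (result.status === 'succeeded') {
      // Part 3 (assumption): treat the first recognized line as the card title.
      const lines = result.analyzeResult.readResults[0].lines;
      return lines.length > 0 ? lines[0].text : null;
    }
    if (result.status === 'failed') break;
  }
  return null; // analysis failed or did not finish in time
}
```

Reading the header with `headers.get('operation-location')` is the detail that is easy to miss, since most of the other values in this flow come from the JSON body.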

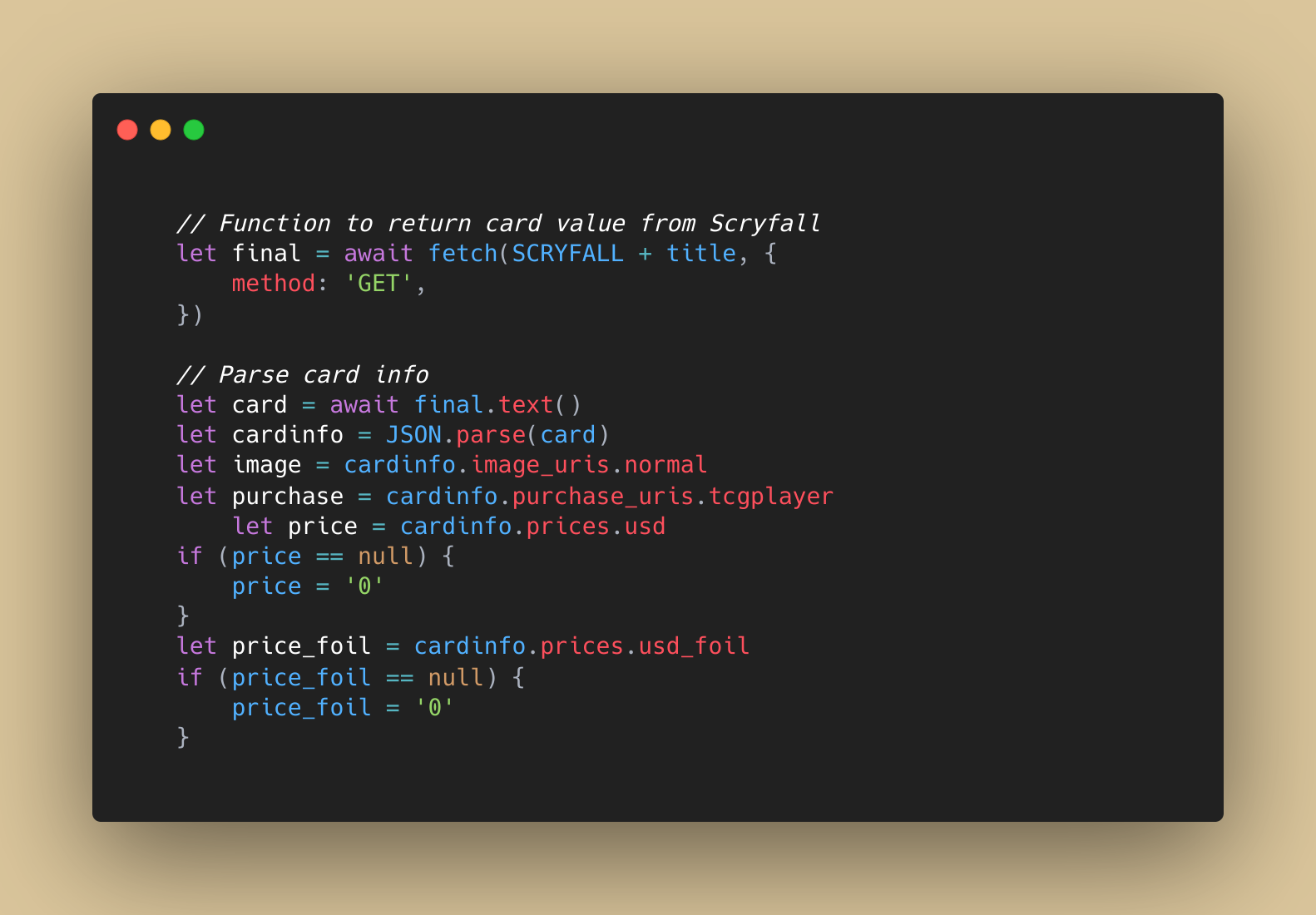

Here we call the Scryfall API with a fetch method. We pass the card title as a query parameter and use the keyword fuzzy so that Scryfall returns a card that approximately matches the provided text, in case the analysis returns text with errors or extra characters.

From the returned card information we parse out the card image, prices, and a link to purchase the card.
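Here is a small sketch of how the lookup might be written. The helper names are hypothetical, and which image size, price, and purchase link the original picked is an assumption. The `image_uris`, `prices`, and `purchase_uris` fields are part of Scryfall's card objects.

```javascript
// Build the fuzzy-search URL for a (possibly misspelled) card title.
function buildScryfallUrl(cardTitle) {
  const params = new URLSearchParams({ fuzzy: cardTitle });
  return `https://api.scryfall.com/cards/named?${params}`;
}

// Pull out the pieces Magic Vision needs from a Scryfall card object.
// Assumption: normal-size image, USD price, TCGplayer purchase link.
function pickCardDetails(card) {
  return {
    name: card.name,
    image: card.image_uris ? card.image_uris.normal : null,
    priceUsd: card.prices ? card.prices.usd : null,
    purchaseLink: card.purchase_uris ? card.purchase_uris.tcgplayer : null,
  };
}

// fetchImpl defaults to the global fetch; the original used node-fetch.
async function lookUpCard(cardTitle, fetchImpl = globalThis.fetch) {
  const res = await fetchImpl(buildScryfallUrl(cardTitle));
  if (!res.ok) return null; // no match, or the text was too ambiguous
  return pickCardDetails(await res.json());
}
```

The null checks are there because some cards, such as double-faced ones, do not carry a top-level `image_uris` field.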

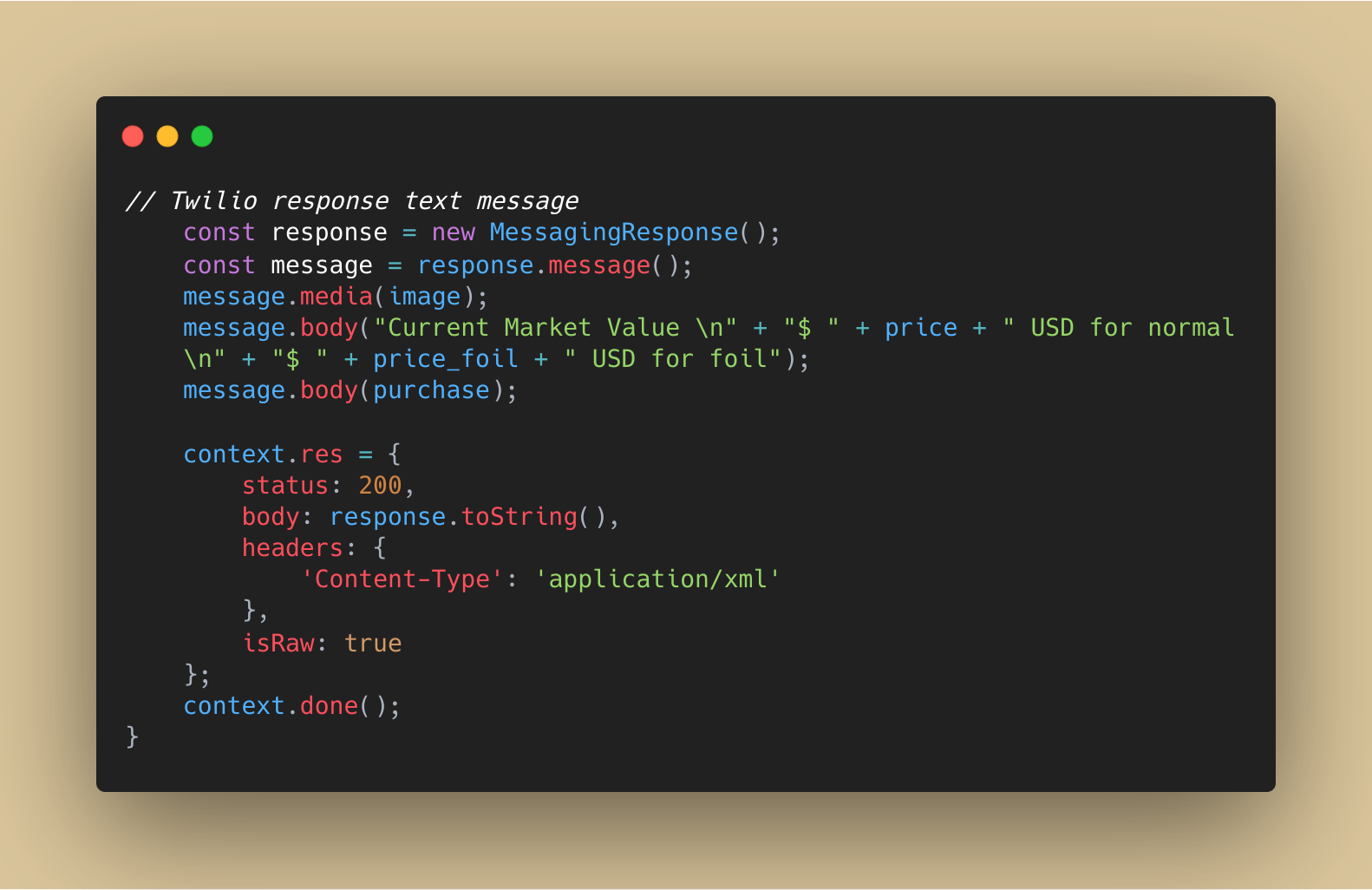

The last thing we do is send a response message back through the Magic Vision number with the appropriate variables passed in. This is accomplished with the twilio package and the MessagingResponse() function.
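A reply along these lines could be built as sketched below. The helper `buildReply`, the shape of the `card` object, and the message wording are assumptions. The `MessagingResponse` class is passed in as an argument only so the sketch stays self-contained. In the real function it would come from the twilio package.

```javascript
// In the real function:
//   const { MessagingResponse } = require('twilio').twiml;

// Hypothetical helper: build the TwiML reply for one card.
function buildReply(MessagingResponse, card) {
  const twiml = new MessagingResponse();
  const message = twiml.message();
  // Text part: card name, price, and purchase link.
  message.body(`${card.name}: $${card.priceUsd}\nBuy or sell: ${card.purchaseLink}`);
  // Media part: the cleaned-up card image.
  message.media(card.image);
  return twiml.toString(); // XML string that Twilio turns into an MMS reply
}

// In the Azure Function, the XML would be returned as the HTTP response, e.g.:
//   context.res = {
//     status: 200,
//     headers: { 'Content-Type': 'text/xml' },
//     body: buildReply(MessagingResponse, card),
//   };
```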

Frontend Video

Backend Video

Challenges + lessons learned#

One of the biggest issues I had was dealing with returning the result of the image text analysis.

I tested that the API was actually working using Postman. The request worked there, but not in my code. Part of the issue was that the documentation for the Computer Vision API did not mention that I needed to poll the API repeatedly until the analysis was complete. I read that iteration was required in another document. I informed Microsoft about the issue in their documentation, along with the specific example they gave.

Once I discovered the issue, I tried to implement a solution that created a new promise after fetching the API multiple times. For whatever reason the Twilio message could not deal with the new promise. I want to follow up on this to get a better understanding of using async/await commands and interacting with promises.

The solution I devised measures elapsed time and delays the next command by a specific amount. In addition, we repeat this up to 5 times in case the analysis is not complete after the first few attempts. Lastly, we break out of the loop if the text is successfully returned.

Another major learning point is dealing with JSON objects and JSON-like objects to parse information. For the most part it was easy to access the data. However, depending on the response type, I had to find an appropriate method to get the requested information.

The one I struggled to parse was the operation-location. This was the location of the analysis, and I spent most of a day trying to get that value out. I reached out to my peer, Ganning, for help. In the end, just talking it out with him led me to the solution.

Thanks and Acknowledgements#

Overall, I learned so much from the Bit Project Serverless Camp. I wish Magic Vision could incorporate everything I learned. However, sometimes it makes more practical sense to leave a feature out and use fewer resources if the result is the same.

I also want to thank my mentor, Nočnica Fee, from New Relic. She gave me the best personal and career advice. Her conference public speaking skills are great, and her confidence in my skills gave me the confidence I needed. It was also an ego boost!

Lastly, I wanna thank my peers. I learned so much from asking questions and receiving their responses. Also, when I would help answer their questions, I learned as well. Spending time commiserating over issues is a great bonding experience and it is even better when you finally get the code to work!

Thank you for everything!